文本摘要

本教程将演示如何使用内置链和 LangGraph 进行文本摘要。

该页面的 先前版本 展示了传统的链:StuffDocumentsChain、MapReduceDocumentsChain 和 RefineDocumentsChain。有关使用这些抽象以及与本教程所示方法进行比较的信息,请参阅此处。

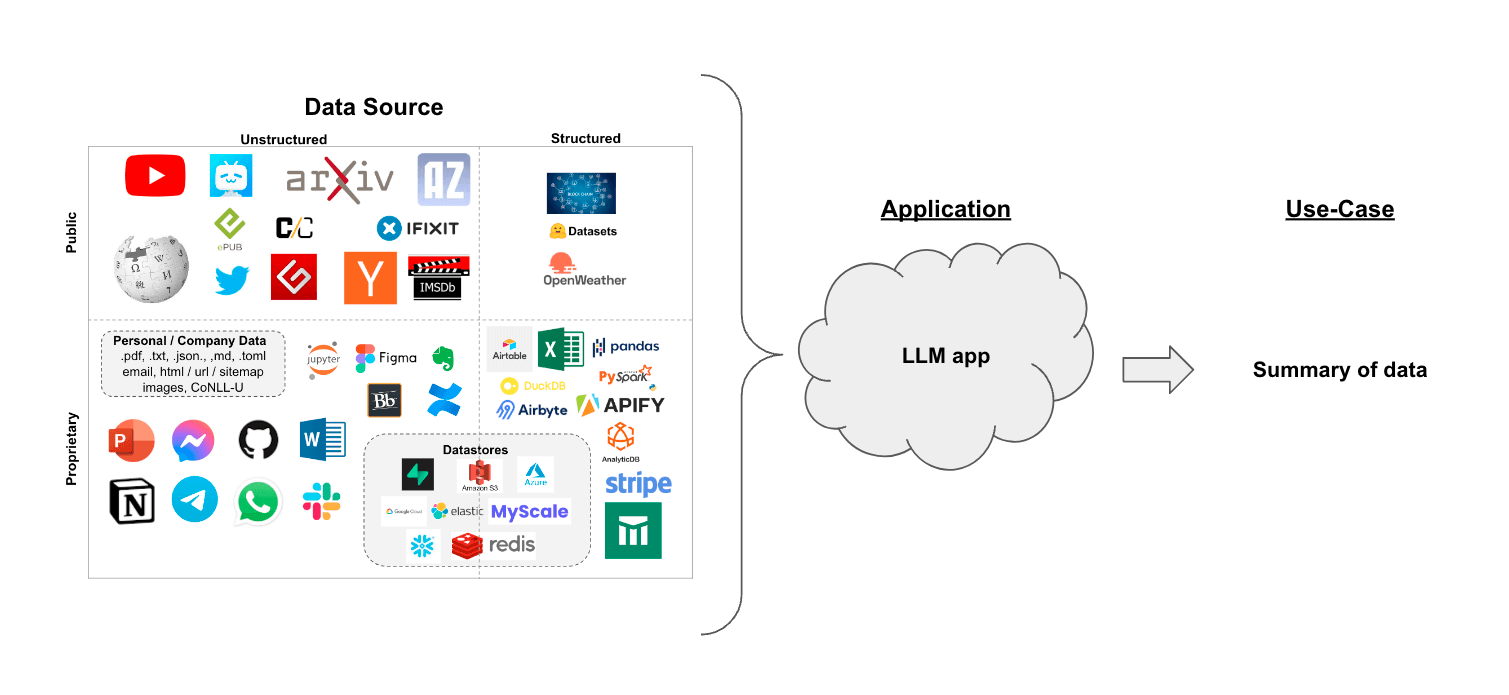

假设您有一组文档(PDF、Notion 页面、客户问题等),并且想要对其内容进行摘要。

鉴于大型语言模型(LLM)在理解和综合文本方面的熟练程度,它们是此任务的绝佳工具。

在 检索增强生成 的上下文中,文本摘要有助于提炼大量检索到的文档中的信息,为 LLM 提供上下文。

在本指南中,我们将介绍如何使用 LLM 对多个文档中的内容进行摘要。

概念

我们将涵盖的概念包括:

-

使用 语言模型。

-

使用 文档加载器,特别是 WebBaseLoader 从 HTML 网页加载内容。

-

总结或以其他方式组合文档的两种方法。

- Stuff,它只是将文档连接到一个提示中;

- Map-reduce,适用于大量文档。它将文档拆分成批次,对这些批次进行总结,然后总结这些总结。

关于这些策略和其他策略(包括 迭代细化)的更短、更有针对性的指南,可以在 操作指南 中找到。

设置

Jupyter Notebook

本指南(以及文档中的其他大部分指南)使用 Jupyter notebooks,并假定读者也使用它。Jupyter notebooks 非常适合学习如何使用 LLM 系统,因为事情常常会出错(意外输出、API 下线等),而在交互式环境中进行指南学习是更好地理解它们的好方法。

本教程及其他教程可能最方便在 Jupyter notebook 中运行。有关如何安装的说明,请参见此处。

安装

要安装 LangChain,请运行:

- Pip

- Conda

pip install langchain

conda install langchain -c conda-forge

更多详情,请参阅我们的 安装指南。

LangSmith

您使用 LangChain 构建的许多应用程序将��包含多个步骤以及对 LLM 的多次调用。 随着这些应用程序变得越来越复杂,能够精确检查链或代理内部发生的事情变得至关重要。 做到这一点的最佳方法是使用 LangSmith。

在上面的链接注册后,请确保设置您的环境变量以开始记录跟踪:

export LANGSMITH_TRACING="true"

export LANGSMITH_API_KEY="..."

或者,如果您在 notebook 中,可以使用以下方式设置它们:

import getpass

import os

os.environ["LANGSMITH_TRACING"] = "true"

os.environ["LANGSMITH_API_KEY"] = getpass.getpass()

概述

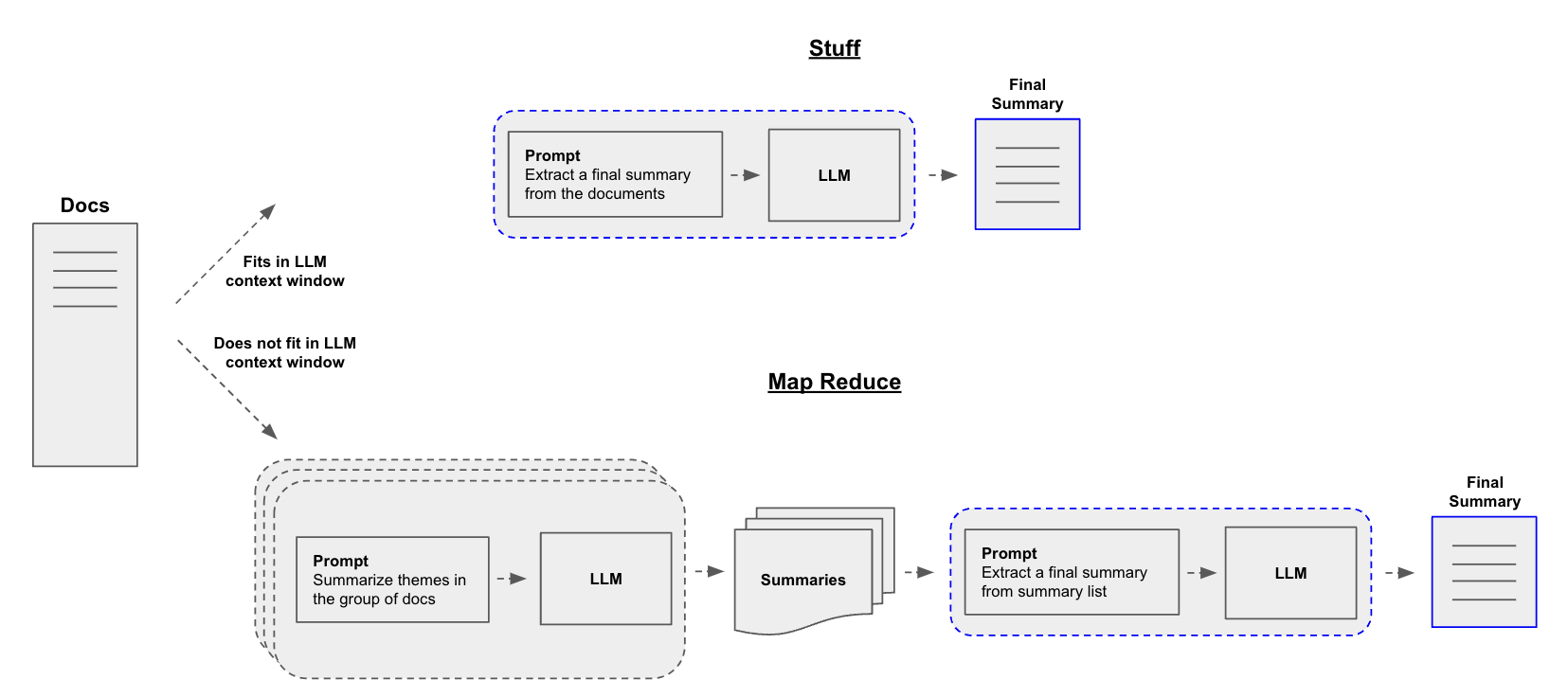

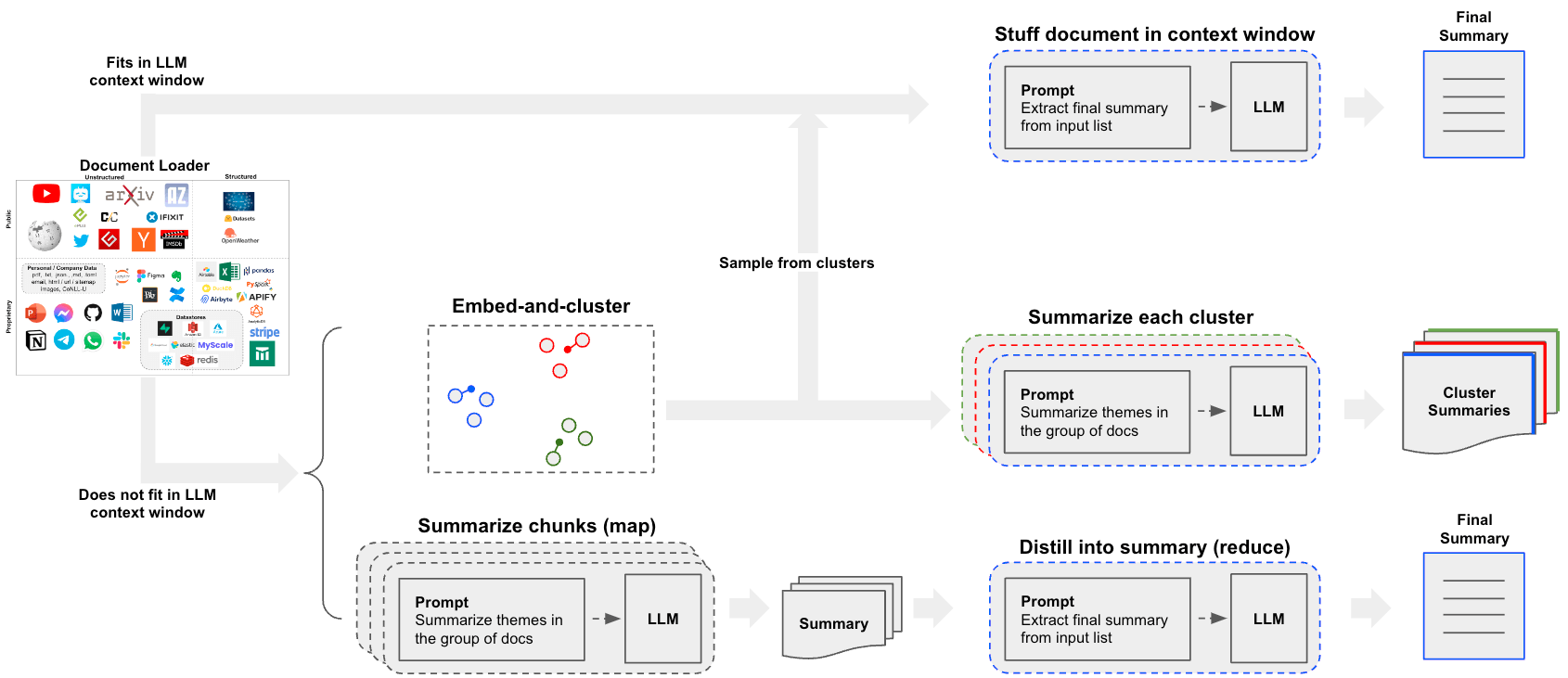

构建一个摘要器的一个核心问题是如何将你的文档传递到 LLM 的上下文窗口中。这有两种常见的方法:

-

Stuff:简单地将所有文档“塞进”一个提示符中。这是最简单的方法(有关用于此方法的create_stuff_documents_chain构造函数的更多信息,请参见此处)。 -

Map-reduce:在“map”步骤中单独总结每个文档,然后将这些摘要“reduce”成最终摘要(有关用于此方法的MapReduceDocumentsChain的更多信息,请参见此处)。

请注意,当子文档的理解不依赖于先前的上下文时,map-reduce 方法尤其有效。例如,在总结大量较短文档的语料库时。在其他情况下,例如总结一部小说或具有内在顺序的文本主体时,迭代改进 可能更有效。

设置

首先设置环境变量并安装包:

%pip install --upgrade --quiet tiktoken langchain langgraph beautifulsoup4 langchain-community

# Set env var OPENAI_API_KEY or load from a .env file

# import dotenv

# dotenv.load_dotenv()

import os

os.environ["LANGSMITH_TRACING"] = "true"

首先,我们加载文档。我们将使用 WebBaseLoader 来加载一篇博客文章:

from langchain_community.document_loaders import WebBaseLoader

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")

docs = loader.load()

接下来,让我们选择一个 LLM:

pip install -qU "langchain[google-genai]"

import getpass

import os

if not os.environ.get("GOOGLE_API_KEY"):

os.environ["GOOGLE_API_KEY"] = getpass.getpass("Enter API key for Google Gemini: ")

from langchain.chat_models import init_chat_model

llm = init_chat_model("gemini-2.0-flash", model_provider="google_genai")

Stuff:在单次 LLM 调用中进行总结

我们可以使用 create_stuff_documents_chain,尤其是在使用更大上下文窗口的模型时,例如:

- 128k token 的 OpenAI

gpt-4o - 200k token 的 Anthropic

claude-3-5-sonnet-20240620

该链将接收一个文档列表,将它们全部插入到提示中,然后将该提示传递给 LLM:

from langchain.chains.combine_documents import create_stuff_documents_chain

from langchain.chains.llm import LLMChain

from langchain_core.prompts import ChatPromptTemplate

# Define prompt

prompt = ChatPromptTemplate.from_messages(

[("system", "Write a concise summary of the following:\\n\\n{context}")]

)

# Instantiate chain

chain = create_stuff_documents_chain(llm, prompt)

# Invoke chain

result = chain.invoke({"context": docs})

print(result)

The article "LLM Powered Autonomous Agents" by Lilian Weng discusses the development and capabilities of autonomous agents powered by large language models (LLMs). It outlines a system architecture that includes three main components: Planning, Memory, and Tool Use.

1. **Planning** involves task decomposition, where complex tasks are broken down into manageable subgoals, and self-reflection, allowing agents to learn from past actions to improve future performance. Techniques like Chain of Thought (CoT) and Tree of Thoughts (ToT) are highlighted for enhancing reasoning and planning.

2. **Memory** is categorized into short-term and long-term memory, with mechanisms for fast retrieval using Maximum Inner Product Search (MIPS) algorithms. This allows agents to retain and recall information effectively.

3. **Tool Use** enables agents to interact with external APIs and tools, enhancing their capabilities beyond the limitations of their training data. Examples include MRKL systems and frameworks like HuggingGPT, which facilitate task planning and execution.

The article also addresses challenges such as finite context length, difficulties in long-term planning, and the reliability of natural language interfaces. It concludes with case studies demonstrating the practical applications of these concepts in scientific discovery and interactive simulations. Overall, the article emphasizes the potential of LLMs as powerful problem solvers in autonomous agent systems.

流式传输

请注意,我们也可以逐个 token 流式传输结果:

for token in chain.stream({"context": docs}):

print(token, end="|")

|The| article| "|LL|M| Powered| Autonomous| Agents|"| by| Lil|ian| W|eng| discusses| the| development| and| capabilities| of| autonomous| agents| powered| by| large| language| models| (|LL|Ms|).| It| outlines| a| system| architecture| that| includes| three| main| components|:| Planning|,| Memory|,| and| Tool| Use|.|

|1|.| **|Planning|**| involves| task| decomposition|,| where| complex| tasks| are| broken| down| into| manageable| sub|go|als|,| and| self|-ref|lection|,| allowing| agents| to| learn| from| past| actions| to| improve| future| performance|.| Techniques| like| Chain| of| Thought| (|Co|T|)| and| Tree| of| Thoughts| (|To|T|)| are| highlighted| for| enhancing| reasoning| and| planning|.

|2|.| **|Memory|**| is| categorized| into| short|-term| and| long|-term| memory|,| with| mechanisms| for| fast| retrieval| using| Maximum| Inner| Product| Search| (|M|IPS|)| algorithms|.| This| allows| agents| to| retain| and| recall| information| effectively|.

|3|.| **|Tool| Use|**| emphasizes| the| integration| of| external| APIs| and| tools| to| extend| the| capabilities| of| L|LM|s|,| enabling| them| to| perform| tasks| beyond| their| inherent| limitations|.| Examples| include| MR|KL| systems| and| frameworks| like| Hug|ging|GPT|,| which| facilitate| task| planning| and| execution|.

|The| article| also| addresses| challenges| such| as| finite| context| length|,| difficulties| in| long|-term| planning|,| and| the| reliability| of| natural| language| interfaces|.| It| concludes| with| case| studies| demonstrating| the| practical| applications| of| L|LM|-powered| agents| in| scientific| discovery| and| interactive| simulations|.| Overall|,| the| piece| illustrates| the| potential| of| L|LM|s| as| general| problem| sol|vers| and| their| evolving| role| in| autonomous| systems|.||

深入了解

- 你可以轻松自定义 prompt。

- 你可以通过

llm参数轻松尝试不同的 LLM(例如,Claude)。

Map-Reduce:通过并行化总结长文本

我们来详细解析一下 Map-Reduce 方法。为此,我们将首先使用 LLM 将每个文档映射到单独的摘要。然后,我们将把这些摘要进行 Reduce(归约)或合并,形成一个单一的全局摘要。

请注意,Map 步骤通常是在输入文档上并行执行的。

构建在 langchain-core 之上的 LangGraph 支持 Map-Reduce 工作流,非常适合解决这个问题:

- LangGraph 允许流式传输各个步骤(例如连续的摘要生成),从而提供对执行的更大�控制;

- LangGraph 的 检查点 支持错误恢复、与人工干预工作流的扩展,以及更轻松地集成到对话式应用程序中。

- 正如我们下面将看到的,LangGraph 的实现易于修改和扩展。

Map

首先,我们来定义与 Map 步骤相关的提示。我们可以使用与上面 stuff 方法相同的摘要提示:

from langchain_core.prompts import ChatPromptTemplate

map_prompt = ChatPromptTemplate.from_messages(

[("system", "Write a concise summary of the following:\\n\\n{context}")]

)

我们也可以使用 Prompt Hub 来存储和获取 prompt。

这将与你的 LangSmith API 密钥 配合使用。

例如,在此处查看 map prompt:https://smith.langchain.com/hub/rlm/map-prompt。

from langchain import hub

map_prompt = hub.pull("rlm/map-prompt")

Reduce

我们还定义了一个 prompt,它接收文档映射结果并将其精简为单一输出。

# Also available via the hub: `hub.pull("rlm/reduce-prompt")`

reduce_template = """

The following is a set of summaries:

{docs}

Take these and distill it into a final, consolidated summary

of the main themes.

"""

reduce_prompt = ChatPromptTemplate([("human", reduce_template)])

通过 LangGraph 进行编排

下面我们实现一个简单的应用程序,该应用程序将对文档列表执行摘要操作,然后使用上述提示进行归约。

当文本相对于 LLM 的上下文窗口较长时,Map-reduce 流程尤其有用。对于长文本,我们需要一种机制来确保归约步骤中要摘要的上下文不超过模型的上下文窗口大小。在这里,我们实现摘要的递归“折叠”:输入根据 token 限制进行分区,并为分区生成摘要。此步骤将重复进行,直到摘要的总长度在所需的限制内,从而能够摘要任意长度的文本。

首先,我们将博客文章分块成要映射的较小的“子文档”:

from langchain_text_splitters import CharacterTextSplitter

text_splitter = CharacterTextSplitter.from_tiktoken_encoder(

chunk_size=1000, chunk_overlap=0

)

split_docs = text_splitter.split_documents(docs)

print(f"Generated {len(split_docs)} documents.")

Created a chunk of size 1003, which is longer than the specified 1000

``````output

Generated 14 documents.

接下来,我们定义图。请注意,我们将最大 token 长度定义为人为设定的 1,000 个 token,以说明“折叠”步骤。

import operator

from typing import Annotated, List, Literal, TypedDict

from langchain.chains.combine_documents.reduce import (

acollapse_docs,

split_list_of_docs,

)

from langchain_core.documents import Document

from langgraph.constants import Send

from langgraph.graph import END, START, StateGraph

token_max = 1000

def length_function(documents: List[Document]) -> int:

"""Get number of tokens for input contents."""

return sum(llm.get_num_tokens(doc.page_content) for doc in documents)

# This will be the overall state of the main graph.

# It will contain the input document contents, corresponding

# summaries, and a final summary.

class OverallState(TypedDict):

# Notice here we use the operator.add

# This is because we want combine all the summaries we generate

# from individual nodes back into one list - this is essentially

# the "reduce" part

contents: List[str]

summaries: Annotated[list, operator.add]

collapsed_summaries: List[Document]

final_summary: str

# This will be the state of the node that we will "map" all

# documents to in order to generate summaries

class SummaryState(TypedDict):

content: str

# Here we generate a summary, given a document

async def generate_summary(state: SummaryState):

prompt = map_prompt.invoke(state["content"])

response = await llm.ainvoke(prompt)

return {"summaries": [response.content]}

# Here we define the logic to map out over the documents

# We will use this an edge in the graph

def map_summaries(state: OverallState):

# We will return a list of `Send` objects

# Each `Send` object consists of the name of a node in the graph

# as well as the state to send to that node

return [

Send("generate_summary", {"content": content}) for content in state["contents"]

]

def collect_summaries(state: OverallState):

return {

"collapsed_summaries": [Document(summary) for summary in state["summaries"]]

}

async def _reduce(input: dict) -> str:

prompt = reduce_prompt.invoke(input)

response = await llm.ainvoke(prompt)

return response.content

# Add node to collapse summaries

async def collapse_summaries(state: OverallState):

doc_lists = split_list_of_docs(

state["collapsed_summaries"], length_function, token_max

)

results = []

for doc_list in doc_lists:

results.append(await acollapse_docs(doc_list, _reduce))

return {"collapsed_summaries": results}

# This represents a conditional edge in the graph that determines

# if we should collapse the summaries or not

def should_collapse(

state: OverallState,

) -> Literal["collapse_summaries", "generate_final_summary"]:

num_tokens = length_function(state["collapsed_summaries"])

if num_tokens > token_max:

return "collapse_summaries"

else:

return "generate_final_summary"

# Here we will generate the final summary

async def generate_final_summary(state: OverallState):

response = await _reduce(state["collapsed_summaries"])

return {"final_summary": response}

# Construct the graph

# Nodes:

graph = StateGraph(OverallState)

graph.add_node("generate_summary", generate_summary) # same as before

graph.add_node("collect_summaries", collect_summaries)

graph.add_node("collapse_summaries", collapse_summaries)

graph.add_node("generate_final_summary", generate_final_summary)

# Edges:

graph.add_conditional_edges(START, map_summaries, ["generate_summary"])

graph.add_edge("generate_summary", "collect_summaries")

graph.add_conditional_edges("collect_summaries", should_collapse)

graph.add_conditional_edges("collapse_summaries", should_collapse)

graph.add_edge("generate_final_summary", END)

app = graph.compile()

LangGraph 允许绘制图结构,以帮助可视化其功能:

from IPython.display import Image

Image(app.get_graph().draw_mermaid_png())

运行时,我们可以对图表进行流式处理,以观察其步骤顺序。下面,我们将简单地打印出步骤的名称。

请注意,由于图中存在循环,在执行时指定 recursion_limit 会很有帮助。当超过指定的限制时,它会引发一个特定的错误。

async for step in app.astream(

{"contents": [doc.page_content for doc in split_docs]},

{"recursion_limit": 10},

):

print(list(step.keys()))

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['generate_summary']

['collect_summaries']

['collapse_summaries']

['collapse_summaries']

['generate_final_summary']

print(step)

{'generate_final_summary': {'final_summary': 'The consolidated summary of the main themes from the provided documents is as follows:\n\n1. **Integration of Large Language Models (LLMs) in Autonomous Agents**: The documents explore the evolving role of LLMs in autonomous systems, emphasizing their enhanced reasoning and acting capabilities through methodologies that incorporate structured planning, memory systems, and tool use.\n\n2. **Core Components of Autonomous Agents**:\n - **Planning**: Techniques like task decomposition (e.g., Chain of Thought) and external classical planners are utilized to facilitate long-term planning by breaking down complex tasks.\n - **Memory**: The memory system is divided into short-term (in-context learning) and long-term memory, with parallels drawn between human memory and machine learning to improve agent performance.\n - **Tool Use**: Agents utilize external APIs and algorithms to enhance problem-solving abilities, exemplified by frameworks like HuggingGPT that manage task workflows.\n\n3. **Neuro-Symbolic Architectures**: The integration of MRKL (Modular Reasoning, Knowledge, and Language) systems combines neural and symbolic expert modules with LLMs, addressing challenges in tasks such as verbal math problem-solving.\n\n4. **Specialized Applications**: Case studies, such as ChemCrow and projects in anticancer drug discovery, demonstrate the advantages of LLMs augmented with expert tools in specialized domains.\n\n5. **Challenges and Limitations**: The documents highlight challenges such as hallucination in model outputs and the finite context length of LLMs, which affects their ability to incorporate historical information and perform self-reflection. Techniques like Chain of Hindsight and Algorithm Distillation are discussed to enhance model performance through iterative learning.\n\n6. **Structured Software Development**: A systematic approach to creating Python software projects is emphasized, focusing on defining core components, managing dependencies, and adhering to best practices for documentation.\n\nOverall, the integration of structured planning, memory systems, and advanced tool use aims to enhance the capabilities of LLM-powered autonomous agents while addressing the challenges and limitations these technologies face in real-world applications.'}}

在相应的 LangSmith 跟踪 中,我们可以看到各个 LLM 调用是如何按节点分组的。

深入了解

自定义

- 如上所示,您可以为映射和归约阶段自定义 LLM 和提示。

实际用例

- 请参阅这篇博客文章中关于分析用户交互(关于 LangChain 文档的问题)的案例研究!

- 该博客文章及相关的 仓库 还介绍了聚类作为一种摘要方法。

- 这为除了

stuff或map-reduce方法之外的另一种值得考虑的路径打开了大门。

后续步骤

我们鼓励您查阅操作指南,以获取更多关于以下内容的详细信息:

和其他概念。